
Over the past year, liquid cooling's standing in the intelligent computing industry has risen rapidly, shifting from a "nice-to-have" option to a "must-have" mandate. In almost every forum on AI computing power, it is described as the only solution, and the prevailing narrative is that if you aren't talking about liquid cooling, you don't understand the future.

However, at Coolnet, we believe this consensus is forming faster than the industry's ability to digest it.

While the thermal density of modern GPUs (like the NVIDIA Blackwell series) makes liquid cooling physically necessary, treating it as a magic wand ignores significant engineering hurdles. Is liquid cooling being overhyped? The answer lies not in the technology itself, but in the gap between "technical feasibility" and "industrial maturity."

The Shift from Component Replacement to System Governance

Industry experts at the recent Green AI Infra Forum (IDCC2025) pointed out a crucial fact: Liquid cooling is not new. From combustion engines to IBM mainframes of the 1960s, fluid heat transfer is a proven path.

The challenge today is scalability and verifiability.

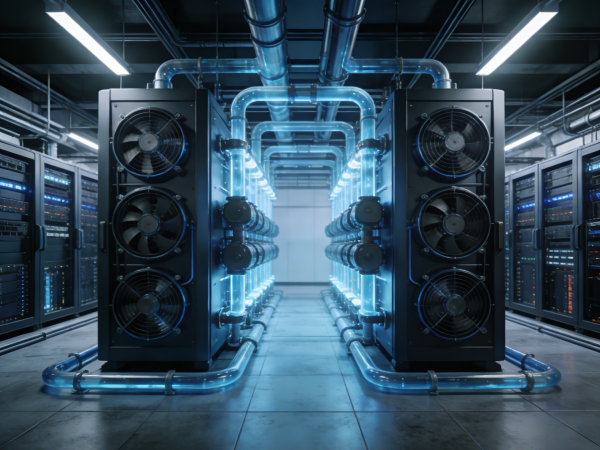

In the era of traditional air cooling, the boundary between the "Facility" (Coolnet’s domain) and "IT Equipment" (Server domain) was clear. The facility provided cold air; the server consumed it.

Liquid cooling blurs this line. The cooling system now extends directly onto the chip. This shift turns data center construction from a procurement task into a complex systems engineering challenge. It is no longer just about buying a Precision Air Conditioner; it is about managing fluid dynamics, material science, and pressure control across the entire lifecycle.

In marketing brochures, Power Usage Effectiveness (PUE) is often cited as the ultimate metric for liquid cooling. However, for data center operators and Coolnet’s engineering teams, PUE is only part of the story.

The real conversation is about TCO (Total Cost of Ownership) and Reliability.

For an operations team, the nightmare isn't a slightly higher electricity bill; it's a coolant leak. A single leakage incident can cause downtime and hardware damage that wipes out years of energy savings. The risk spans every wetted component: cold plates, manifolds, joints, and valves. Ensuring these components remain sealed under pressure for 5 to 10 years is a massive engineering hurdle.
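To make the "years of energy savings" claim concrete, here is a minimal back-of-the-envelope sketch. Every figure in it (hardware loss, downtime cost, annual savings) is a hypothetical placeholder rather than Coolnet or industry data, and the function name is ours; substitute your own numbers.

```python
def years_of_savings_erased(incident_cost: float, annual_energy_savings: float) -> float:
    """Return how many years of energy savings one leak incident consumes."""
    if annual_energy_savings <= 0:
        raise ValueError("annual_energy_savings must be positive")
    return incident_cost / annual_energy_savings


# Hypothetical figures for illustration only.
hardware_damage = 200_000        # replaced servers and cold plates
downtime_cost = 150_000          # lost revenue and penalties during the outage
annual_energy_savings = 120_000  # yearly electricity savings versus air cooling

years = years_of_savings_erased(hardware_damage + downtime_cost, annual_energy_savings)
print(f"One incident erases about {years:.1f} years of energy savings")
```

The sketch deliberately ignores incident probability. A fuller model would weight the incident cost by an estimated annual leak likelihood, which is exactly the number that long-term sealing reliability determines.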

Liquid cooling isn't just "water." It often involves deionized water, propylene glycol, or dielectric fluids.

Hidden Costs: These fluids degrade. They require regular testing for pH balance and biological growth (bacteria).

Supply Chain: High-quality propylene glycol is significantly more expensive than standard alternatives and often relies on complex international supply chains.

As noted by industry veterans, while liquid cooling lowers HVAC energy consumption, the savings don't always translate to net profit when you factor in the high cost of Modular Data Center integration and specialized fluid maintenance.
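The trade-off above can be sketched as simple arithmetic. PUE is total facility energy divided by IT equipment energy, so for a fixed IT load the energy saved is roughly the IT energy multiplied by the difference in PUE. The sketch below assumes a constant IT load that is identical under both cooling options, and every number and function name is a hypothetical placeholder, not a measured figure.

```python
HOURS_PER_YEAR = 8760


def annual_energy_savings(it_load_kw: float, pue_air: float,
                          pue_liquid: float, price_per_kwh: float) -> float:
    """Yearly electricity cost saved by a lower PUE at a constant IT load."""
    # Facility energy = IT energy * PUE, so the saving scales with the PUE gap.
    return it_load_kw * HOURS_PER_YEAR * (pue_air - pue_liquid) * price_per_kwh


def net_annual_benefit(energy_savings: float, fluid_maintenance: float,
                       integration_premium: float, amortization_years: float) -> float:
    """Energy savings minus yearly fluid upkeep and the amortized integration premium."""
    return energy_savings - fluid_maintenance - integration_premium / amortization_years


# Hypothetical inputs for illustration only.
savings = annual_energy_savings(it_load_kw=1000, pue_air=1.5,
                                pue_liquid=1.2, price_per_kwh=0.10)
net = net_annual_benefit(savings, fluid_maintenance=60_000,
                         integration_premium=1_500_000, amortization_years=10)
print(f"Gross energy savings: {savings:,.0f} per year")
print(f"Net benefit after fluid and integration costs: {net:,.0f} per year")
```

With these placeholder inputs, most of the gross saving is absorbed by maintenance and integration costs, which is the point of looking at TCO rather than PUE alone. Different assumptions can just as easily push the net figure negative or strongly positive.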

A common myth is that liquid cooling "simplifies" the data center by removing fans. In reality, it introduces a new layer of chemical and hydraulic complexity.

Material Compatibility: Dissimilar metals in the same loop (copper, aluminum, stainless steel) can form a galvanic couple through the coolant, so the fluid chemistry and the wetted materials must be matched and monitored to prevent galvanic corrosion.

Talent Gap: Traditional data center technicians are experts in airflow and electrical systems (like our UPS Power Systems). Liquid cooling requires knowledge of fluid mechanics and chemistry. The industry is currently facing a shortage of personnel qualified to maintain these hybrid systems.

Liquid cooling doesn't remove complexity; it transfers it from the air ducts to the piping.

So, is liquid cooling overhyped?

Yes and no. It is "overhyped" as a simple, drop-in replacement that solves all problems. It is, however, "undervalued" as a strategic restructuring of the data center.

At Coolnet, we view this not as a trend to blindly follow, but as an engineering standard to master. Whether you are deploying a hybrid air-liquid solution or a full immersion system, the key is Reliability.

For the foreseeable future, the industry will likely see a Hybrid Era:

Air Cooling (supported by efficient In-Row cooling) for standard IT loads.

Liquid Cooling (Direct-to-Chip) for high-density AI clusters.

The winners in this era won't be those who chase the lowest PUE on paper, but those who build infrastructure that balances efficiency with safety, maintainability, and cost control.

Discussion: Do you think the operational risks of liquid cooling (leaks, maintenance) outweigh the energy savings in your current projects? Join the conversation on our [LinkedIn Page].
